Autonomous Drone with Visual SLAM and Path Planning

Simulation and Real-Time Implementation of Visual SLAM in an Indoor Environment Using a Quadcopter

| Team: Anil Kumar Shah, Bibek Yonzan, Lucky Babu Jayswal, Samim Khadka | |

| Timeline: 1 year | Technologies: ROS Noetic, Gazebo, PX4, C++, Docker, RTAB-Map |

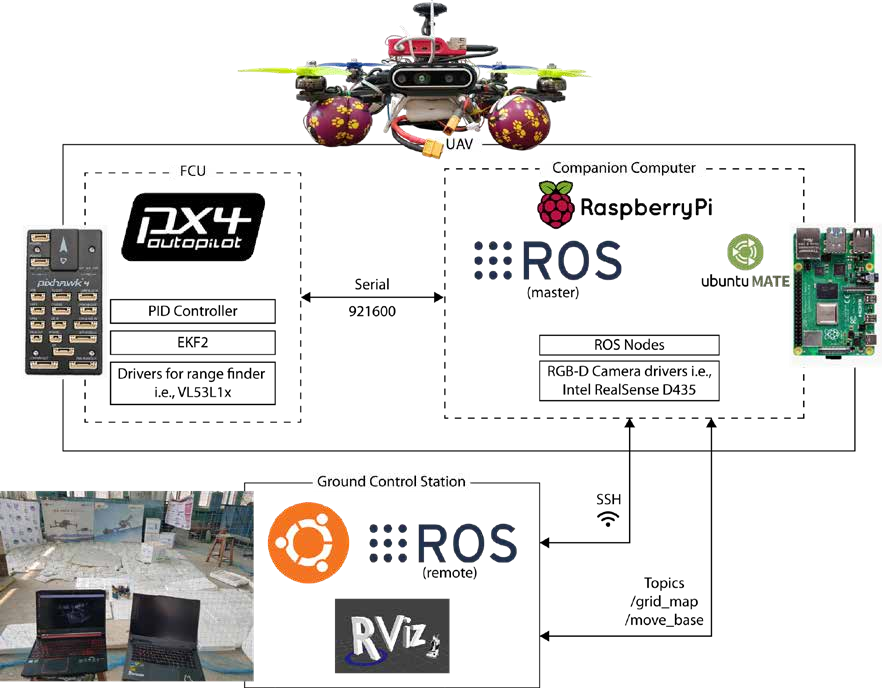

The goal was to implement a visual SLAM algorithm on a drone to enable autonomous flight in indoor environments with degraded GPS signals. Indoor autonomous navigation poses unique challenges: GPS-denied conditions, dynamic obstacles, and the need for real-time mapping and localization. Our solution uses an RGB-D camera, with a Pixhawk 4 as the flight controller and a Raspberry Pi 4 as the flight computer, to localize the drone and map the environment for obstacle-free autonomous navigation.

We developed an autonomous system that integrates RTAB-Map with a PX4 and Raspberry Pi flight stack, achieving reliable indoor navigation with 7.7 cm accuracy.

| Technical Implementation |

- RTAB-Map implementation: Configured and optimized for quadcopter platforms with an RGB-D sensor

- Height Sensing: Configured a LiDAR to measure the height of the quadcopter above the ground while in flight

- Loop Closure Detection: Fine-tuned parameters for place and marker recognition in indoor environments

- Path Planning Implementation: Implemented a global and local planner for autonomous navigation

- Global Planner: Configured the A* algorithm for global obstacle avoidance and path planning

- Local Planner: Configured Regulated Pure Pursuit (RPP) for local path planning and adjustments

- PX4 Parameter Tuning: Adjusted the PX4 parameters for visual-inertial odometry and GPS-denied environments

- Sensor Fusion: Integrated visual-inertial odometry using an RGB-D sensor (Intel D435) and the PX4 IMU for state estimation
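
The global planning step listed above can be illustrated with a minimal A* search over a 2D occupancy grid. This is only a sketch under stated assumptions: the grid, the 4-connected motion model, the unit step cost, and the function name `astar` are hypothetical and are not taken from the project's planner configuration.

```python
import heapq


def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    grid is a list of rows; 0 marks a free cell and 1 an occupied cell.
    Motion is 4-connected with unit step cost (an assumption for this sketch).
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for 4-connected unit-cost motion.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start to rebuild the path.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry; a cheaper route was found already
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (current[0] + dr, current[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue  # outside the map
            if grid[nxt[0]][nxt[1]] == 1:
                continue  # occupied cell
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


# Hypothetical 4 x 5 map with a wall of boxes in the middle column.
GRID = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]
path = astar(GRID, (3, 0), (3, 4))
```

In this toy map the only way past the wall is through the top row, so the returned path detours through cell `(0, 2)`. A local planner such as RPP would then track this path while reacting to obstacles that are not in the map.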

| Results and Performance |

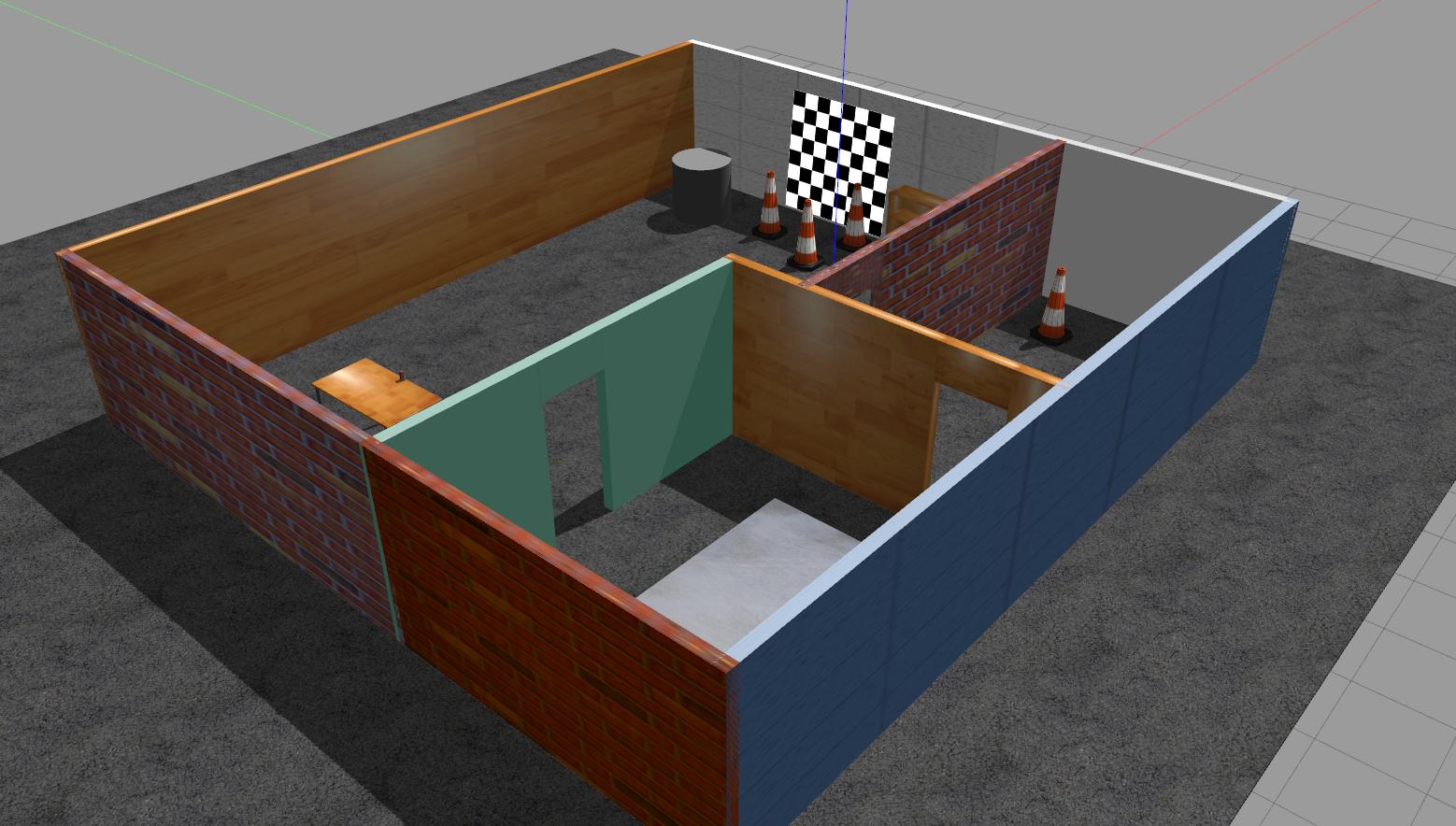

The quadcopter was tested in three kinds of environment: a netted, cluttered indoor arena, two furnished rooms in active use, and an outdoor area. The indoor arena measured 35 x 25 feet, with nets on all four sides, and contained boxes acting as obstacles in various arrangements.

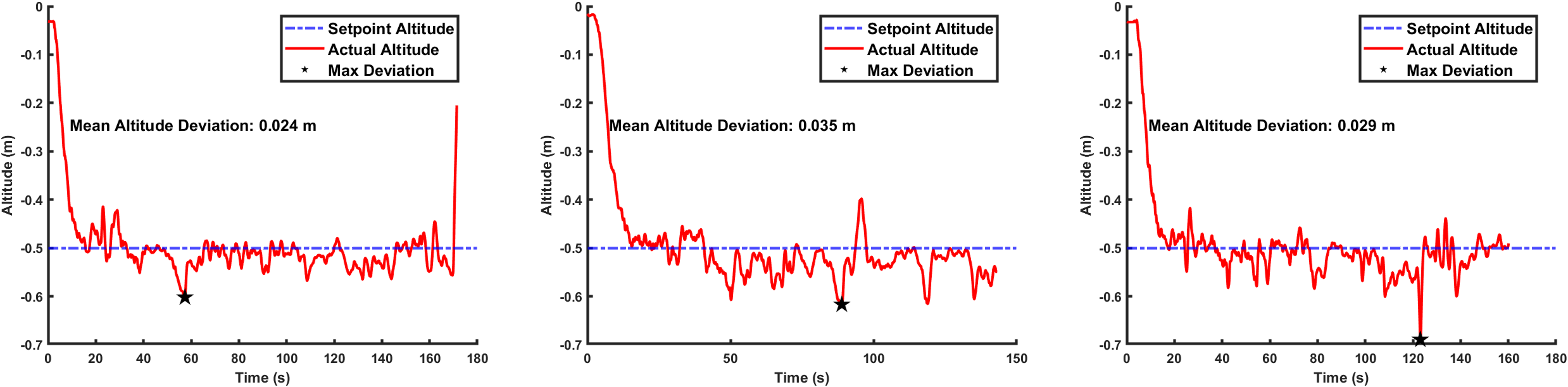

The indoor arena was flown in three configurations: no obstacles (i.e., no boxes), a single obstacle, and two obstacles. The drone was initially flown at a height of 0.5 m above the ground. The results for each configuration are:

| Configuration | Mean Altitude Deviation |
| --- | --- |
| Zero Obstacles | 0.024 m |
| Single Obstacle | 0.035 m |
| Two Obstacles | 0.029 m |
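
For reference, a figure like the mean altitude deviation above could be computed from logged altitude samples as sketched below. The metric definition (mean absolute difference from the 0.5 m setpoint), the function name, and the sample values are assumptions for illustration; the source does not state how the deviation was calculated, and the numbers are not flight data.

```python
def mean_altitude_deviation(altitudes_m, setpoint_m=0.5):
    """Mean absolute difference between logged altitudes and the setpoint."""
    if not altitudes_m:
        raise ValueError("need at least one altitude sample")
    return sum(abs(z - setpoint_m) for z in altitudes_m) / len(altitudes_m)


# Made-up altitude samples in metres, for illustration only.
samples = [0.48, 0.53, 0.50, 0.47, 0.52]
deviation = mean_altitude_deviation(samples)
```

Using the absolute difference keeps overshoot and undershoot from cancelling each other out, which a plain signed mean would allow.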

A 3D point cloud map was also obtained from the test flights. The following is the point cloud map for the two-obstacle configuration.

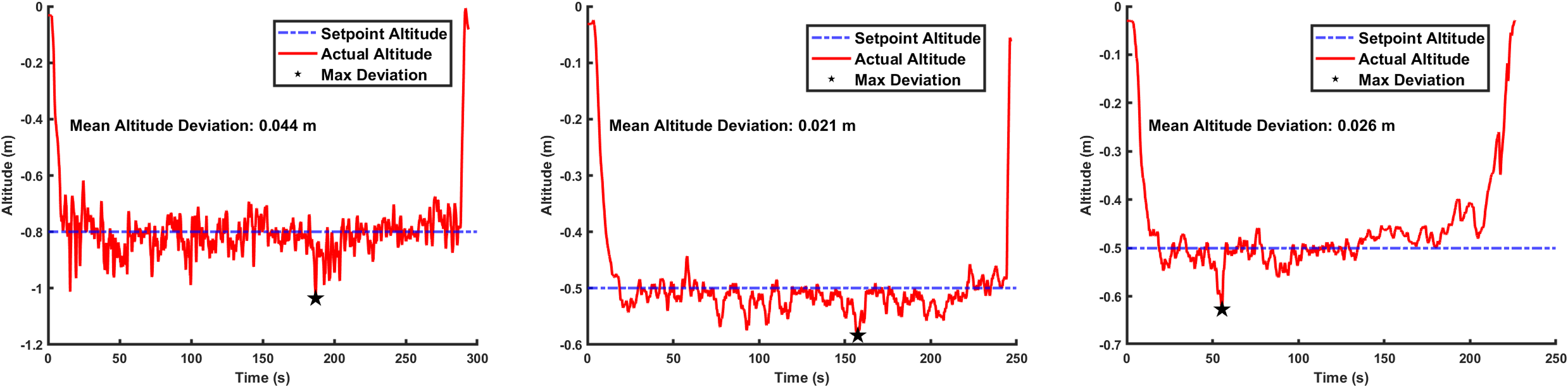

Next, three real-world environments were selected to mimic practical operation: a garden in front of the CES building on our campus, the senior-year classroom, and the manufacturing laboratory.

The results and the subsequent plots and point cloud maps are:

| Environment | Mean Altitude Deviation |
| --- | --- |
| Garden | 0.044 m |
| Senior Classroom | 0.021 m |
| Manufacturing Lab | 0.026 m |

| Further Work and Issues |

- Processing power was one of the major bottlenecks in this project. Replacing the Raspberry Pi with a Jetson Nano or an Intel NUC, which would add a GPU or simply a more powerful CPU, could improve performance considerably.

- Another major issue arose during sensor fusion, partly because of the processing limitations of the onboard computer and partly because of the mismatch between the polling rates of the image sensor and the IMU. A camera with a built-in IMU could replace the main sensing unit, reducing the dependence on external sensor fusion.

- Due to budget constraints, ground truth could not be measured, so a proper quantitative analysis was not possible. Installing motion capture cameras could provide ground truth data, making the results and analysis more meaningful.
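
A rate mismatch like the one described above is commonly handled by resampling the faster IMU stream at each camera frame's timestamp. The following is a minimal sketch using linear interpolation; the function name, the sample rates, and the data are hypothetical, and this is not the fusion pipeline used in the project.

```python
from bisect import bisect_left


def interpolate_imu(imu_times, imu_values, frame_time):
    """Linearly interpolate a scalar IMU reading at a camera frame timestamp.

    imu_times must be sorted in ascending order. Returns None when the frame
    lies outside the IMU time range, so no extrapolation is attempted.
    """
    if not imu_times or frame_time < imu_times[0] or frame_time > imu_times[-1]:
        return None
    i = bisect_left(imu_times, frame_time)
    if imu_times[i] == frame_time:
        return imu_values[i]  # exact timestamp match, no interpolation needed
    t0, t1 = imu_times[i - 1], imu_times[i]
    weight = (frame_time - t0) / (t1 - t0)
    return imu_values[i - 1] + weight * (imu_values[i] - imu_values[i - 1])


# Hypothetical data: IMU sampled every 10 ms, camera frames at irregular times.
imu_t = [0.00, 0.01, 0.02, 0.03, 0.04]
gyro_z = [0.0, 0.1, 0.2, 0.3, 0.4]  # rad/s, made-up values
frame_times = [0.005, 0.033, 0.050]
aligned = [interpolate_imu(imu_t, gyro_z, t) for t in frame_times]
```

The last frame falls after the final IMU sample, so it yields `None` rather than an extrapolated value. A camera with a hardware-synchronized IMU, as suggested above, would remove the need for this kind of software alignment.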

| Flight Test Videos |

This is one of the first test flights, in which we tested offboard control on the drone for the first time.

This is one of the tests in the indoor environment at IIEC.

This is one of the final test flights, recorded in the senior classroom environment. The full set of final test flights can be found here.